We asked Google Gemini to write a blog post ranking which AI model (Grok, Claude, ChatGPT, or Gemini) is best for high school computer science and software development teachers. Its response is below.

The AI Co-Pilot in Your Classroom: Ranking Claude, ChatGPT, Gemini, and Grok for High School CS Curriculum Development (July 2025)

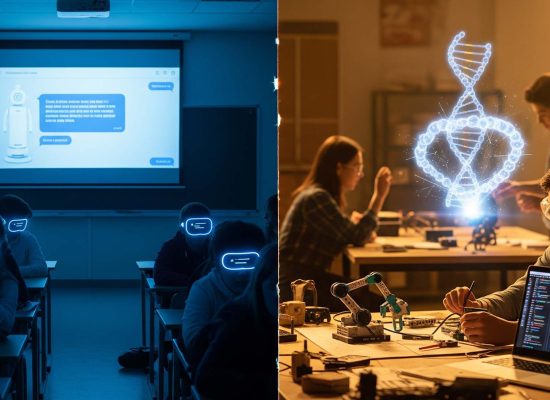

Introduction: Beyond the Hype – AI as a Practical Tool for the CTE Classroom

Career and Technical Education (CTE) instructors stand at a challenging crossroads. On one side, the relentless pace of technological change, particularly the explosion of Artificial Intelligence into the software development lifecycle, demands constant curriculum updates to ensure student preparedness for the modern workforce. On the other, the foundational need for high-quality, engaging, and pedagogically sound instructional materials remains a time-consuming and often overwhelming task. The promise of generative AI is not that it adds another complex tool to an already crowded toolkit, but that it can serve as a genuine “force multiplier”.1 By automating the most tedious aspects of curriculum design—drafting lesson plans, creating assessments, and generating code examples—AI has the potential to free up an instructor’s most valuable resource: time for direct, meaningful engagement with students.2

However, the integration of AI into computer science education is more profound than simple automation. The very nature of a software developer’s job is undergoing a fundamental transformation. Industry expectations are shifting away from the ability to write flawless code from a blank slate. Instead, the most critical skills for today’s entry-level engineers involve the ability to understand, adapt, debug, and critically evaluate AI-generated code for its correctness, security, and maintainability.3 This paradigm shift has direct implications for the classroom. The coding labs and solutions we provide to students must not only be functionally correct but must also serve as exemplars of high-quality, professional code. This new reality makes the choice of an AI assistant for curriculum development a decision of significant pedagogical weight.

This report moves beyond generalized comparisons and marketing claims to provide a definitive, task-specific analysis and ranking of the four leading large language models as of July 2025: Anthropic’s Claude 4, OpenAI’s o3 as offered in ChatGPT (referred to throughout as ChatGPT-o3), Google’s Gemini 2.5 Pro, and xAI’s Grok 4. The central question is not “Which model is smartest?” but rather, “Which model is the most effective and reliable co-pilot for a CTE computer science instructor tasked with developing comprehensive curriculum materials, including complete coding labs with solutions?”

To answer this, the analysis will proceed in four parts. First, a head-to-head comparison of the models’ core capabilities, translating complex technical benchmarks into their practical implications for curriculum design. Second, a hands-on stress test where each model is tasked with generating an identical, multi-part coding lab from a single prompt. Third, a final ranking and recommendation based on the synthesis of benchmark data and practical performance. Finally, this report will explore a “better alternative”—a shift in thinking from using a single chatbot to adopting an AI-powered workflow that mirrors professional practice and better prepares students for the future of software development.

Part 1: The Core Capabilities – A Head-to-Head Comparison (As of July 2025)

Before putting the models to a practical test, it is essential to understand their foundational strengths and weaknesses. A model’s performance on abstract benchmarks, while seemingly academic, provides a powerful proxy for its ability to handle the complex, multi-faceted task of curriculum design.

Reasoning & Problem-Solving: The Engine of Complexity

The creation of a sophisticated coding lab is not a simple act of code generation; it is an exercise in instructional design. It requires the ability to deconstruct a complex problem, structure it into logical steps, anticipate edge cases, and align the entire exercise with specific learning objectives. A model’s raw reasoning and problem-solving capacity is therefore a primary predictor of its ability to design pedagogically sound assignments.

As of mid-2025, Grok 4 is the undisputed leader in this domain. It has demonstrated groundbreaking performance on a suite of the world’s most difficult academic and reasoning benchmarks. It has achieved top scores on Humanity’s Last Exam (HLE), a collection of PhD-level questions across STEM fields, as well as the GPQA Science benchmarks and the USAMO 2025 Mathematical Olympiad.4 Critically, Grok 4’s performance scales dramatically when it is allowed to use tools like a code interpreter, revealing a “tools-native” architecture that was trained from the ground up to reason through calculation and execution.6 This suggests a capacity not just to recall information, but to actively problem-solve in a way that mirrors human expert workflows.

Gemini 2.5 Pro exhibits strong, competitive performance in quantitative reasoning, posting excellent scores on benchmarks like AIME (competition mathematics) and GPQA.4 However, a significant gap appears when testing for abstract generalization. On the ARC-AGI benchmark, which measures the ability to generalize from a few abstract examples, Grok 4 scores nearly double its closest competitor, while Gemini’s performance is notably weaker.4 This indicates that while Gemini is proficient at solving problems within established logical frameworks, it may be less adept at the kind of novel, out-of-the-box thinking required to design truly innovative projects.

Claude Opus 4 has established a reputation for being the most consistent and reliable model for structured, multi-step reasoning.8 It can reliably follow long chains of logic, even when presented with conflicting or abstract information. While it performs well on mainstream reasoning tests, it does not attempt to push the absolute boundaries of abstract thought in the same way as Grok.7 It is the dependable workhorse, less likely to produce a flash of brilliance but also less likely to go off track.

ChatGPT-o3 (the successor to GPT-4) excels at problems that fall within the scope of its vast training data, such as grade-school math (MGSM benchmark) and challenging reading comprehension tasks (DROP benchmark).7 However, there is a conspicuous lack of published results for this model on the higher-order reasoning benchmarks where Grok dominates, suggesting that its strengths lie more in applied knowledge than in raw, abstract problem-solving.

This data leads to a crucial understanding for curriculum development: a model’s ability to design a complex, multi-part lab is directly related to its abstract reasoning power. A lab that requires students to, for example, build a data analysis pipeline involves more than just writing a few functions; it involves architecting a small system. Grok 4’s proven ability to tackle PhD-level problems and mathematical proofs suggests it is best equipped to conceptualize the overarching structure and logic of such a complex project. Claude’s reliability makes it a strong choice for fleshing out well-defined, structured assignments, while Gemini and ChatGPT may be better suited for more straightforward, single-concept labs.

Code Generation & Quality: From Algorithms to Applications

For any CTE instructor, the quality of the generated code is paramount. A lab’s solution code serves as a critical learning artifact. It must not only be functionally correct but also pedagogically sound—clean, readable, well-commented, and an exemplar of professional best practices. Here, the models show clear differentiation.

Claude 4 and its earlier family members (like Claude 3.5 Sonnet) consistently lead the pack in producing high-quality, educational code. The family scores at the top of key coding benchmarks like HumanEval (92.0%) and SWE-Bench.7 More importantly, qualitative feedback repeatedly praises Claude for generating “clean, maintainable code” and “well-explained code that’s actually usable in real projects”.8 It excels in tasks that require sustained effort, such as long-running refactoring and step-by-step project planning, making it ideal for crafting comprehensive solutions.7

Grok 4 is also a formidable coding model, showing particular strength in competitive coding benchmarks, debugging, and code optimization.4 Its ability to ingest an entire codebase to fix bugs or add features is a powerful capability that mirrors real-world development.5 In head-to-head tests, its final output for complex tasks like creating a 3D animation was on par with or superior to competitors.5

Gemini 2.5 Pro presents a concerning paradox. It performs well on certain benchmarks, such as code editing (Aider benchmark 74%), and is capable of generating well-organized code with a good component structure.5 However, in practical, complex tasks like cloning a Figma design, its final implemented code failed to work correctly, despite the clean structure.5 This suggests a disconnect between its ability to conceptualize code and its ability to deliver a reliable, functional final product, making it a risky choice for generating student-facing lab solutions.

ChatGPT-o3 is highly effective at generating correct code for small, well-defined, single-function prompts, as evidenced by its strong HumanEval score.7 It is the go-to tool for quick snippets and boilerplate. However, developer feedback notes that it can be “lazy” when tasked with larger projects, often providing incomplete code that requires significant manual intervention to become fully functional.11

This reveals a critical dichotomy between code that is merely correct and code that is of high pedagogical quality. A student learns as much from reading the solution as they do from attempting the problem. Claude’s consistent strength in producing clean, well-commented, and maintainable code makes it the standout choice for generating exemplary solutions that can be used as teaching tools. While Grok is powerful and ChatGPT is fast, neither receives the same consistent praise for the educational quality of their code output. This suggests a powerful potential workflow: an instructor might use Grok’s superior reasoning to brainstorm the concept for a complex lab, but then turn to Claude to generate the actual solution code to ensure it meets the highest pedagogical standards.

Context & Instruction Following: The Art of a Coherent Lesson

Generating a complete curriculum unit—including a lesson plan, a student handout, a coding lab, a solution, a quiz, and a rubric—is a long-context, multi-step endeavor. The AI must not only process a large amount of initial instruction but also maintain coherence across all generated components. For example, the quiz questions must directly map to the learning objectives stated in the lesson plan. This capability hinges on two factors: the size of the model’s context window and, more importantly, its ability to effectively utilize that context.

In terms of raw capacity, Gemini 2.5 Pro is the leader, boasting a massive 1-million-token context window, which allows it to process entire books or large codebases in a single prompt.4

Grok 4 follows with a 256,000-token window, and Claude 4 offers a 200,000-token window. Both demonstrate very good “effective utilization,” meaning they are adept at finding and using information within that large context.4

ChatGPT-o3 has a comparable window of up to 200,000 tokens and is generally consistent.8

However, raw context size is only part of the story. The crucial differentiator is a model’s ability to follow complex, multi-part instructions and maintain focus over a long, interactive conversation. In this area, qualitative feedback and testing consistently place Claude 4 at the top. It is described as the “most predictable in structured outputs and instruction-following” and the best at “maintaining memory over multi-turn interactions”.7 This reliability is essential for generating an internally consistent curriculum package. While Grok holds short-term context well, it can sometimes be sidetracked by more recent inputs, and Gemini’s massive window does not always translate to superior relevance without careful prompting.8

For the CTE instructor, this means that effective context utilization is far more important than the theoretical maximum window size. The task of creating a complete lesson package is a quintessential test of a model’s ability to follow a long and detailed set of instructions without losing the plot. Claude’s proven superiority in instruction-following and maintaining conversational memory makes it the safest and most reliable choice for generating a complete, classroom-ready unit in a single, complex prompt. With other models, an instructor may need to engage in more iteration, re-prompting, and manual editing to ensure all the generated pieces align with the original goals.

| Core Capability | Claude 4 | ChatGPT-o3 | Gemini 2.5 Pro | Grok 4 |
|---|---|---|---|---|
| Complex Reasoning & Logic (Winner: Grok 4) | Reliable and consistent for structured, multi-step problems.8 | Good at applied knowledge and simpler logic problems but lacks results on top-tier reasoning benchmarks.7 | Strong in quantitative math but lags significantly in abstract generalization and creative problem-solving.4 | Unmatched leader in advanced, abstract, and mathematical reasoning, dominating difficult benchmarks like HLE and USAMO.4 |
| Code Quality & Pedagogy (Winner: Claude 4) | Consistently produces clean, maintainable, and well-commented code ideal for educational exemplars.8 Top scores on benchmarks like HumanEval.9 | Excellent for generating correct, single-function code snippets but can be “lazy” and incomplete on larger projects.7 | Code can be well-organized but is often functionally flawed or incomplete in complex, real-world tasks.5 | Very strong in algorithmic problem-solving and debugging but code can be less polished or “student-friendly” than Claude’s.5 |
| Long-Context & Instruction Following (Winner: Claude 4) | Best-in-class at maintaining memory and focus over long, multi-turn conversations and following complex, structured instructions precisely.7 | Good context window and generally stable, but less adept than Claude at memory-intensive, multi-part tasks.8 | Largest theoretical context window (1M tokens), but effective utilization can be inconsistent without careful prompting.4 | Holds short-term context well but can sometimes overweigh recent input, making it less stable for long, structured prompts.8 |
| Real-Time Information & Web Access (Winner: Grok 4) | Limited web search capabilities, primarily for retrieving information from provided links.13 | Integrated web search, but it is not its primary strength compared to Grok’s real-time integration.7 | Strong integration with Google Search for grounding and research.14 | Native, real-time access to the X (formerly Twitter) platform and the web, making it ideal for tasks requiring up-to-the-minute information.4 |
| Best For… | Generating high-quality, pedagogically sound code and complete, structured curriculum documents. | Quick, everyday tasks: generating boilerplate, explaining concepts, drafting communications. | Tasks involving multimodality (image/video analysis) and deep integration with Google Workspace tools. | Conceptualizing novel, complex projects and creating labs based on real-time data or new technologies. |

Part 2: The Ultimate Test – Generating a Complete Coding Lab

Theoretical capabilities and benchmark scores are informative, but the ultimate measure of a model’s utility is its performance on a real-world task. To that end, each of the four models was subjected to an identical, comprehensive prompt designed to generate a complete coding lab package suitable for a high school computer science class.

The Scenario & The Prompt

The chosen lab is the “Python Price Tracker,” a project that is both engaging for students and covers a range of essential programming concepts. The goal is to create a Python script that scrapes the current price of a product from a mock e-commerce webpage, appends the price and a timestamp to a CSV file, and then uses that data to generate a simple plot showing the price trend over time. This single project effectively tests web scraping (requests, BeautifulSoup4), file I/O (csv), data handling (datetime), and basic data visualization (matplotlib).
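
The pipeline described above can be sketched in a few functions. This is a minimal illustration, not any model's actual output: to stay dependency-free it swaps BeautifulSoup4 for the standard library's `html.parser`, parses an inline HTML string instead of fetching a page with requests, and leaves out the matplotlib plotting step. The mock page, the `id="price"` attribute, and all function names are assumptions made for the example.

```python
import csv
from datetime import datetime
from html.parser import HTMLParser
from pathlib import Path

# Hypothetical mock product page; the id "price" is an assumption.
MOCK_HTML = """
<html><body>
  <h1>Wireless Mouse</h1>
  <span id="price">$24.99</span>
</body></html>
"""


class PriceParser(HTMLParser):
    """Collects the text inside the element whose id is "price"."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price_text = None

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("id") == "price":
            self._in_price = True

    def handle_endtag(self, tag):
        # Good enough for a flat price element with no nested tags.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and self.price_text is None:
            self.price_text = data.strip()


def extract_price(html: str) -> float:
    """Return the product price as a float, e.g. "$1,024.50" -> 1024.5."""
    parser = PriceParser()
    parser.feed(html)
    if parser.price_text is None:
        raise ValueError("no element with id='price' found")
    return float(parser.price_text.replace("$", "").replace(",", ""))


def append_price(csv_path: Path, price: float, when: datetime) -> None:
    """Append one (timestamp, price) row, writing a header for a new file."""
    is_new = not csv_path.exists()
    with csv_path.open("a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(["timestamp", "price"])
        writer.writerow([when.isoformat(timespec="seconds"), f"{price:.2f}"])


def read_history(csv_path: Path) -> list[tuple[datetime, float]]:
    """Load the rows back as (datetime, float) pairs, ready for plotting."""
    with csv_path.open(newline="") as f:
        return [
            (datetime.fromisoformat(row["timestamp"]), float(row["price"]))
            for row in csv.DictReader(f)
        ]
```

In a classroom version, the inline string would typically be replaced by the text of a fetched page, and the pairs returned by `read_history` would be passed to a plotting library to draw the price trend.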

A detailed, structured “master prompt” was crafted based on established best practices in prompt engineering.16 The prompt instructed the AI to assume the persona of an expert CTE curriculum designer and to generate a complete, classroom-ready package containing four distinct components:

- Lesson Plan: A detailed plan including specific learning objectives, alignment with CSTA standards, key vocabulary, and a step-by-step instructional sequence for a 90-minute class block.

- Student Lab Handout: A clear, well-formatted handout with background information, setup instructions (including Python library installation), and step-by-step requirements for the student to follow.

- Complete Python Solution: A fully functional, well-commented Python script that correctly implements all features of the price tracker. The code was required to be clean, readable, and demonstrate professional best practices.

- Assessment Materials: A five-question multiple-choice quiz with a detailed answer key to check for understanding, and a comprehensive grading rubric for evaluating the student’s submitted lab project.
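
The exact prompt is not reproduced in this post. As a rough sketch of how a prompt with this persona and these four components might be assembled, the snippet below builds one from a dictionary. Every phrase in it is illustrative wording rather than the prompt that was actually used, and `build_master_prompt` is a hypothetical helper.

```python
# Illustrative component descriptions, paraphrased from the list above.
COMPONENTS = {
    "Lesson Plan": "learning objectives, CSTA standards alignment, key "
                   "vocabulary, and a step-by-step sequence for a 90-minute block",
    "Student Lab Handout": "background, setup instructions including Python "
                           "library installation, and step-by-step requirements",
    "Complete Python Solution": "a fully functional, well-commented script that "
                                "follows professional best practices",
    "Assessment Materials": "a five-question multiple-choice quiz with answer "
                            "key, plus a grading rubric for the lab",
}


def build_master_prompt(lab_title: str, components: dict[str, str]) -> str:
    """Assemble a persona line, the task, and one numbered line per component."""
    lines = [
        "You are an expert CTE curriculum designer for high school computer science.",
        f'Create a complete, classroom-ready package for the lab "{lab_title}".',
        "Produce the following sections, in this order, each under its own heading:",
    ]
    for number, (name, details) in enumerate(components.items(), start=1):
        lines.append(f"{number}. {name}: {details}")
    lines.append("Keep the quiz and rubric aligned with the stated learning objectives.")
    return "\n".join(lines)


prompt = build_master_prompt("Python Price Tracker", COMPONENTS)
print(prompt)
```

Keeping the components in a data structure makes it easy to send the identical prompt to several models, which is the condition a side-by-side comparison depends on.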

The Results: A Side-by-Side Takedown

The outputs from each model were evaluated based on their completeness, correctness, and pedagogical quality. The results revealed stark differences in their suitability for this comprehensive task.

Claude 4 Analysis:

Claude 4 delivered an exceptional, classroom-ready package that required minimal editing.

- Lesson Plan & Handout: The generated documents were impeccably structured, adhering to every constraint in the prompt. The learning objectives were clear and directly tied to the activities, and the instructions for the student were logical and easy to follow. This performance aligns with its documented strength in following complex, structured instructions.8

- Solution Code: The Python script was not only functionally perfect but also a model of pedagogical excellence. It was cleanly formatted, divided into logical functions, and featured copious, helpful comments explaining each step of the process. This confirms the widespread feedback on Claude’s ability to produce clean, maintainable, and well-explained code.10

- Assessments: The quiz questions were relevant, well-phrased, and accurately tested the key concepts from the lab. The rubric was detailed, fair, and directly aligned with the project requirements.

Grok 4 Analysis:

Grok 4 produced a conceptually strong but less polished package.

- Lesson Plan & Handout: Leveraging its powerful reasoning abilities,4 Grok’s lesson plan offered a particularly insightful take on the project, suggesting potential extensions like error handling for network issues or analyzing price changes to send alerts. The core structure was solid, but it required some reformatting to be as “classroom-ready” as Claude’s output.

- Solution Code: The code was correct and highly efficient, reflecting its strength in algorithmic problem-solving.8 However, it was less “student-friendly” than Claude’s solution. The comments were sparser, and the code was more densely packed, prioritizing efficiency over readability—a common trait for models optimized for competitive coding rather than education.

- Assessments: The quiz questions were challenging and conceptually sound, but the rubric was less detailed than Claude’s, requiring more fleshing out by the instructor.

Gemini 2.5 Pro Analysis:

Gemini’s performance was disappointing and highlighted the risks of relying on it for complex coding tasks.

- Lesson Plan & Handout: The text-based documents were well-written and logically structured. Gemini correctly identified the key concepts and laid out a reasonable plan of instruction.

- Solution Code: This is where the model failed. Echoing the results of other practical tests,5 the generated Python script was riddled with errors. It correctly identified the necessary libraries but failed to implement the web scraping logic correctly, producing a script that would not run without significant debugging. Despite its code being well-organized into functions, the core functionality was broken.

- Assessments: Because the solution code was flawed, the quiz questions and rubric, while well-formatted, were based on a non-working implementation, making them unusable without a complete rewrite.

ChatGPT-o3 Analysis:

ChatGPT-o3 produced a competent and functional baseline, but it lacked the depth and polish of the top performers.

- Lesson Plan & Handout: The documents were solid and usable, covering all the required points from the prompt. They were, however, more generic and less detailed than those produced by Claude or Grok.

- Solution Code: The script was functional and correct. It successfully scraped the data, wrote to the CSV, and generated a plot. However, it was delivered as a single, monolithic block of code with minimal comments, confirming developer feedback that it can be “lazy” with larger requests.11 An instructor would need to spend considerable time refactoring and commenting the code to make it a suitable learning tool.

- Assessments: The quiz and rubric were adequate but basic. They covered the main points but lacked the nuance and detail of Claude’s output.

| Task Component | Claude 4 | ChatGPT-o3 | Gemini 2.5 Pro | Grok 4 |
|---|---|---|---|---|
| Lesson Plan Quality | 10/10 – Perfectly structured, detailed, and aligned with all prompt requirements. | 7/10 – Functional and complete but generic and lacking detail. | 8/10 – Well-written and logically structured, a strong point for the model. | 9/10 – Conceptually insightful with excellent ideas for extensions, but less polished formatting. |
| Lab Handout Clarity | 10/10 – Clear, logical, step-by-step instructions. Ready for student use. | 8/10 – Clear enough to be followed, but could benefit from more detail and better formatting. | 8/10 – The handout itself was clear, but it described a non-working process. | 9/10 – Very clear instructions, though slightly less organized than Claude’s. |
| Solution Code Correctness | 10/10 – Flawless execution. The code ran perfectly on the first try. | 9/10 – The code was functionally correct and achieved the desired outcome. | 2/10 – The code was non-functional and contained significant logical errors. | 10/10 – The code was correct, efficient, and robust. |
| Solution Code Pedagogy | 10/10 – Exemplary. Clean, well-commented, and perfectly structured for learning. | 5/10 – Poor. Monolithic script with minimal comments, requiring significant refactoring. | N/A – The code was not pedagogically useful as it was incorrect. | 7/10 – Good, but prioritized efficiency over readability. Less commenting than ideal for students. |
| Assessment Quality | 10/10 – Relevant, well-phrased questions and a detailed, fair rubric. | 7/10 – Basic but usable quiz and rubric. | 2/10 – Assessments were based on a flawed solution and were therefore invalid. | 8/10 – Conceptually strong questions but a less-detailed rubric. |
| Overall Rank for this Task | 1st | 3rd | 4th | 2nd |
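
The post does not state how the overall rank was derived from the component scores. One simple way to sanity-check it, assuming an unweighted mean with the N/A cell left out, is shown below. The scores are copied from the table; the averaging rule is an assumption.

```python
from statistics import mean

# Scores in table order: lesson plan, handout, code correctness,
# code pedagogy, assessments. None marks the N/A cell.
SCORES = {
    "Claude 4": [10, 10, 10, 10, 10],
    "ChatGPT-o3": [7, 8, 9, 5, 7],
    "Gemini 2.5 Pro": [8, 8, 2, None, 2],
    "Grok 4": [9, 9, 10, 7, 8],
}


def average(scores):
    """Unweighted mean over the components that received a score."""
    return mean(s for s in scores if s is not None)


ranking = sorted(SCORES, key=lambda model: average(SCORES[model]), reverse=True)
for place, model in enumerate(ranking, start=1):
    print(f"{place}. {model}: {average(SCORES[model]):.1f}")
# 1. Claude 4: 10.0
# 2. Grok 4: 8.6
# 3. ChatGPT-o3: 7.2
# 4. Gemini 2.5 Pro: 5.0
```

Under this assumption the order matches the table's last row. Counting the N/A cell as zero instead lowers Gemini's average to 4.0 and leaves the order unchanged.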

Part 3: The Final Ranking & Recommendations for CTE Instructors

Synthesizing the benchmark data from Part 1 and the practical test results from Part 2, a clear hierarchy emerges for the specific task of developing high school computer science curriculum. The final ranking is not a measure of which model is “best” in a general sense, but which is the most effective and reliable partner for a busy CTE instructor.

#1 – The Top Performer: Claude 4

Claude 4 earns the top spot due to its unmatched excellence in the areas that matter most for this use case: reliability, pedagogical quality, and instruction following. While Grok 4 may be “smarter” in terms of raw reasoning power, Claude 4 proves to be the better and more dependable teacher’s assistant. Its victory is built on a foundation of three key strengths:

- Pedagogical Code Generation: Claude consistently produces code that is not just correct but is also clean, well-commented, and structured in a way that is ideal for teaching and learning.8

- Superior Instruction Following: In the complex, multi-part task of generating a complete curriculum package, Claude’s ability to adhere to every constraint and maintain coherence across all documents is unparalleled.8

- Classroom-Ready Output: The materials generated by Claude require the least amount of subsequent editing and refinement, saving instructors valuable time and effort. Its “helpful” and safety-conscious personality also aligns well with educational environments.19

For instructors who need a reliable tool to produce high-quality, comprehensive curriculum materials from a single prompt, Claude 4 is the clear winner.

#2 – The Strong Contender: Grok 4

Grok 4 is an exceptionally powerful model, and its second-place ranking should not be mistaken for a major weakness. Its phenomenal reasoning ability makes it the best-in-class tool for conceptualizing new and challenging projects.4 It is the “ideas guy” in the AI faculty lounge. An instructor could use Grok to brainstorm a truly novel, semester-long capstone project that pushes advanced students in new directions. Its native, real-time web access is another significant advantage, allowing for the creation of labs based on current events, new API releases, or real-time data streams.4 The ideal workflow for an advanced instructor might involve using Grok 4 to architect the project and generate the core logic, then passing that concept to Claude 4 to flesh out the student-facing materials and solution code.

#3 – The Niche Specialist: ChatGPT-o3

ChatGPT-o3 remains an indispensable tool, but its strengths lie outside the core task of generating complete, complex labs. It is the “Swiss Army Knife” of AI assistants—fast, accessible, and remarkably versatile for a wide range of smaller, everyday teaching tasks.7 Instructors will find it to be the best and quickest option for generating boilerplate code, explaining a single concept in simple terms, creating a quick worksheet, drafting a rubric from scratch, or composing an email to parents or administrators.1 While it falls short on the end-to-end lab generation task due to less polished and sometimes incomplete outputs, its utility for a hundred other classroom-adjacent tasks makes it a valuable part of any instructor’s toolkit.

#4 – The Generalist: Gemini 2.5 Pro

Gemini 2.5 Pro is a powerful and highly capable model, but its strengths are misaligned with the specific needs of this use case. Despite its massive context window and strong performance on some benchmarks, its demonstrated unreliability in executing complex, real-world coding tasks is a critical flaw for an educator who needs dependable solutions.5 Its primary advantages lie in its deep multimodality (the ability to analyze images, audio, and video) and its seamless integration with the Google Workspace ecosystem.14 These are powerful features for other applications but are largely irrelevant when the core task is generating reliable, text-and-code-based curriculum. For this job, Gemini is simply the wrong tool.

Part 4: The “Better Alternative” – Moving from a Chatbot to an AI-Powered Workflow

The analysis thus far has focused on identifying the best standalone chatbot for curriculum development. However, the most effective and professionally relevant way to use AI in software engineering has already evolved beyond this paradigm. The “better alternative” is not a fifth model, but rather a fundamental shift in approach: moving from a conversational chatbot in one window to an AI-powered workflow deeply integrated within the development environment itself. Teaching students this modern workflow is arguably more valuable for their career readiness than teaching them to use any single chatbot.

This is the difference between asking a person for directions and using a live GPS integrated into your car’s dashboard. While a chatbot can provide code, an AI-integrated development environment (IDE) understands the context of the entire project, can perform edits across multiple files, and is designed to assist throughout the entire software development lifecycle (SDLC). Developer feedback and professional best practices point to a multi-tool, multi-model workflow as the most effective approach.11

Specialized AI Coding Assistants: The Professional’s Toolkit

A new class of tools has emerged that are not just text editors with an AI plugin, but are AI-native coding environments. These tools often use powerful models like Claude or GPT-4 as their engine but provide a vastly superior workflow for development. Key examples include:

- Cursor: An AI-first code editor that is “code-aware.” It can ingest an entire codebase, allowing an instructor or student to ask questions, debug issues, or refactor code with full project context. It feels like a version of VS Code where the AI is a true collaborator.21

- Replit: An all-in-one, browser-based cloud IDE with powerful AI assistance. Its AI assistant, originally launched as “Ghostwriter,” can generate, explain, and fix code. It is an ideal environment for prototyping, running quick scripts, and collaborative projects without complex local setup.21

- Qodo: An AI agent specifically designed for the professional SDLC. It focuses on high-quality code generation, intelligent test case creation, and AI-powered code reviews, helping to enforce best practices and catch bugs early.23

The Multi-Agent Strategy: Division of Labor

Expert developers are increasingly adopting a “division of labor” approach, using different AI models for the tasks at which they excel.11 A highly effective workflow for curriculum development could look like this:

- Architecture & Planning: Use a model with superior reasoning capabilities, like Grok 4, to brainstorm and outline the high-level structure and logic of a complex project. For simpler tasks, a fast and inexpensive model like GPT-4o-mini could suffice.

- Implementation: Use a model renowned for producing clean, high-quality, pedagogical code, like Claude 4, to write the specific functions and classes. This step should ideally take place within an AI-aware IDE like Cursor, which provides the necessary context.

- Debugging & Refactoring: Use a tool with deep codebase understanding, like the features within Cursor or a specialized agent like Grok 4, to analyze the completed code, identify potential bugs, and suggest performance or readability improvements.

The Expert Recommendation for CTE Instructors

For the CTE classroom, this professional workflow can be adapted into a powerful teaching strategy that not only streamlines curriculum development but also explicitly teaches modern, AI-augmented software engineering skills. The recommended best practice is as follows:

- Generate the Core: Use Claude 4 for the initial, heavy-lifting task of generating the complete curriculum package (lesson plan, handout, solution, assessments). Its reliability and the pedagogical quality of its output make it the best choice for creating the foundational materials.

- Integrate the Environment: Load the generated project code into an AI-powered IDE like Cursor or Replit. Use this environment for all classroom instruction and student work.

- Teach the Workflow: Use the integrated AI within the IDE to actively demonstrate professional workflows to students. Show them how to ask the AI to explain a complex block of code, refactor a function for better readability, or generate unit tests for a class.

- Design for Augmentation: Create labs where the primary task is not to write code from scratch, but to work with AI-generated code. For example, provide students with the initial, functional script from Claude and task them with extending its features, debugging a deliberately introduced error, or improving its performance. This directly mirrors the skills that are becoming most valuable in the industry and teaches students to be critical consumers of, and collaborators with, AI.3
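
A "debug the seeded error" lab of the kind described in the last step might look like the sketch below. It is a hypothetical example written for illustration, not output from Claude or any other model: the function names, the quiz-score scenario, and the test cases are all assumptions. Students receive `average_score` and the checker, and are asked to explain and fix whatever the checker reports.

```python
def average_score(scores):
    """Return the mean of a list of quiz scores, or 0.0 for an empty list."""
    if not scores:
        return 0.0
    # Instructor note: seeded bug. Floor division (//) drops the fractional part.
    return sum(scores) // len(scores)


def average_score_fixed(scores):
    """Reference solution: true division keeps the fractional part."""
    if not scores:
        return 0.0
    return sum(scores) / len(scores)


def failing_cases(func):
    """Run func against known inputs; return (input, expected, actual) for each mismatch."""
    cases = [
        ([80, 90, 100], 90.0),  # divides evenly, so the seeded bug stays hidden
        ([90, 85], 87.5),       # exposes the bug
        ([], 0.0),              # edge case: empty list
    ]
    failures = []
    for scores, expected in cases:
        actual = func(scores)
        if actual != expected:
            failures.append((scores, expected, actual))
    return failures


if __name__ == "__main__":
    print("student version:", failing_cases(average_score))
    print("reference version:", failing_cases(average_score_fixed))
```

The first test case passes even with the bug present, which gives students a concrete reason to discuss why a single passing test is not evidence of correctness.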

Conclusion: Your Role as the Human in the Loop

The rise of powerful AI assistants does not diminish the role of the educator; it elevates it. This analysis has shown that while AI can be an extraordinary tool for content creation, it remains a tool, not a replacement for pedagogical expertise. The instructor remains the indispensable “human in the loop,” whose judgment is critical for guiding the AI, validating its output for accuracy and potential bias, adapting the generated materials to the specific needs of their students, and, most importantly, facilitating the “productive struggle” that is the hallmark of deep and lasting learning.1

Some educators and researchers rightly caution that an overreliance on AI can short-circuit the cognitive processes necessary for learning.1 The goal is not to have the AI do the thinking for the student, but to use the AI to remove the tedious barriers that prevent the student from engaging in higher-order thinking. By handling the “busy work” of drafting initial content, AI empowers instructors to dedicate more energy to the uniquely human and most impactful aspects of teaching: mentorship, fostering curiosity, asking probing questions, and inspiring the next generation of creators and problem-solvers.1

Ultimately, the best AI for your classroom is not simply the one with the highest benchmark score. It is the one that you, the expert educator, can wield most effectively as a co-pilot. It is the tool that allows you to amplify your impact, enrich your students’ learning experiences, and prepare them not just for their first job in tech, but for a lifetime of learning and adaptation in the ever-changing world of technology.

Appendix: Prompt for Your Feature Image

For a compelling feature image for this article, use the following prompt with a generative image AI like Midjourney or DALL-E 3. The trailing --ar 16:9 parameter is Midjourney syntax for the aspect ratio; omit it in tools that do not accept it and choose a widescreen size in their settings instead.

Photorealistic, hopeful and inspiring image for a tech education blog article. A diverse high school computer science classroom. In the foreground, a female teacher of color is leaning over a student’s shoulder, pointing at a laptop screen with a smile. The laptop screen shows lines of Python code and a brightly colored data visualization graph. In the background, subtly visible on a large monitor, is a glowing, abstract representation of a neural network, with nodes and connections. The lighting is warm and natural, coming from large classroom windows. The overall mood is collaborative, focused, and optimistic, conveying the idea of a human teacher guiding a student with the help of advanced AI tools. Style: cinematic, shallow depth of field, vibrant but realistic colors. --ar 16:9

Works cited

1. Using AI/chat GPT to create curriculum and lesson plans? Is this frowned upon? – Reddit, accessed July 31, 2025, https://www.reddit.com/r/NYCTeachers/comments/1loqvbs/using_aichat_gpt_to_create_curriculum_and_lesson/

2. 9 Best AI Course Curriculum Generators for Educators [2025 …, accessed July 31, 2025, https://www.teachfloor.com/blog/ai-curriculum-generator

3. Computer Science Education in the Age of AI – Boise State University, accessed July 31, 2025, https://www.boisestate.edu/coen-cs/2025/06/25/computer-science-education-in-the-age-of-ai/

4. Grok 4 vs Gemini 2.5 Pro vs Claude 4 vs ChatGPT o3 2025 Benchmark Results, accessed July 31, 2025, https://www.getpassionfruit.com/blog/grok-4-vs-gemini-2-5-pro-vs-claude-4-vs-chatgpt-o3-vs-grok-3-comparison-benchmarks-recommendations

5. Grok 4 vs. Claude Opus 4 vs. Gemini 2.5 Pro Coding Comparison …, accessed July 31, 2025, https://dev.to/composiodev/grok-4-vs-claude-opus-4-vs-gemini-25-pro-coding-comparison-35ed

6. Grok 4: Tests, Features, Benchmarks, Access & More – DataCamp, accessed July 31, 2025, https://www.datacamp.com/blog/grok-4

7. We Tested Grok 4, Claude, Gemini, GPT-4o: Which AI Should You …, accessed July 31, 2025, https://felloai.com/2025/07/we-tested-grok-4-claude-gemini-gpt-4o-which-ai-should-you-use-in-july-2025/

8. Grok 4 vs Claude 4 vs Gemini 2.5 vs o3: Model Comparison 2025, accessed July 31, 2025, https://www.leanware.co/insights/grok4-claude4-opus-gemini25-pro-o3-comparison

9. GPT-4o Mini vs. Claude 3.5 Sonnet: A Detailed Comparison for Developers – Helicone, accessed July 31, 2025, https://www.helicone.ai/blog/gpt-4o-mini-vs-claude-3.5-sonnet

10. Claude Customer Reviews 2025 | AI Code Generation | SoftwareReviews, accessed July 31, 2025, https://www.infotech.com/software-reviews/products/claude?c_id=478

11. Is GPT-4o better to use or is Claude 3.5 sonnet better to use? – Cursor – Community Forum, accessed July 31, 2025, https://forum.cursor.com/t/is-gpt-4o-better-to-use-or-is-claude-3-5-sonnet-better-to-use/51766

12. Claude Review: Is It Worth It in 2025? [In-Depth] | Team-GPT, accessed July 31, 2025, https://team-gpt.com/blog/claude-review/

13. Claude Review: An Easy-to-Use AI Chatbot That Emphasizes Privacy – PCMag, accessed July 31, 2025, https://www.pcmag.com/reviews/claude

14. Empower Learning with Gemini for Education, accessed July 31, 2025, https://edu.google.com/ai/gemini-for-education/

15. Seven Grok 4 Examples to Try in the Chat Interface | DataCamp, accessed July 31, 2025, https://www.datacamp.com/tutorial/grok-4-examples

16. 31 Powerful ChatGPT Prompts for Coding [UPDATED], accessed July 31, 2025, https://www.learnprompt.org/chat-gpt-prompts-for-coding/

17. The Only Prompt You Need : r/ClaudeAI – Reddit, accessed July 31, 2025, https://www.reddit.com/r/ClaudeAI/comments/1gds696/the_only_prompt_you_need/

18. Claude – Saint Leo University Center for Teaching and Learning …, accessed July 31, 2025, https://faculty.saintleo.edu/claude/

19. My Claude AI review: is it the best AI for writing and research? – Techpoint Africa, accessed July 31, 2025, https://techpoint.africa/guide/claude-ai-review/

20. Learn More About Google Workspace with Gemini, accessed July 31, 2025, https://edu.google.com/workspace-for-education/add-ons/google-workspace-with-gemini/

21. Top 10 AI Coding Tools Every Developer Should Learn in 2025 | by javinpaul – Medium, accessed July 31, 2025, https://medium.com/javarevisited/top-10-ai-coding-tools-every-developer-should-learn-in-2025-f9ffbac10526

22. The 8 best vibe coding tools in 2025 – Zapier, accessed July 31, 2025, https://zapier.com/blog/best-vibe-coding-tools/

23. 15 Best AI Coding Assistant Tools in 2025 – Qodo, accessed July 31, 2025, https://www.qodo.ai/blog/best-ai-coding-assistant-tools/

24. 5 assignment design ideas for ChatGPT in Computer Science Education | CodeGrade Blog, accessed July 31, 2025, https://www.codegrade.com/blog/5-assignment-design-ideas-for-chatgpt-in-computer-science-education

25. The Role of Artificial Intelligence in Computer Science Education: A Systematic Review with a Focus on Database Instruction – MDPI, accessed July 31, 2025, https://www.mdpi.com/2076-3417/15/7/3960

26. ChatGPT’s Impact On Our Brains According to an MIT Study – Time Magazine, accessed July 31, 2025, https://time.com/7295195/ai-chatgpt-google-learning-school/